Android XR, Google Glasses and What We Call Things We Put on Our Face

Google’s recent I/O event brought an array of announcements, mostly focused on AI. However, near the end of the keynote, Google turned to Android XR, providing an update on its spatial computing platform and running a live demonstration on a pair of smart glasses.

Shahram Izadi, who leads Google’s efforts on extended reality (XR), said: “Glasses with Android XR are lightweight and designed for all-day wear, even though they’re packed with technology. A camera and microphones allow Gemini to see and hear the world. Speakers let you listen to the AI, play music or take calls. And an optional in-lens display privately shows you helpful information, just when you need it”.

There’s a noteworthy point to unpack here. Android XR is designed to work on devices without a visual element, which simultaneously makes a lot of sense from a strategic perspective and no sense at all from a technical one.

“XR” typically refers to devices offering a visual experience — something you can look at, be it augmented, mixed or virtual reality. Saying that Android XR will work on a device with no visual element is like saying that Android Auto will work on a skateboard. Just because a skateboard has four wheels doesn’t make it a car; in the same way, just because it’s a pair of glasses doesn’t mean it’s offering an XR experience.

Semantics aside, this means that the core element of Android XR — Google’s Gemini AI assistant — will be coming to glasses without built-in displays, meaning there should soon be Android devices to rival the Ray-Ban Meta offering. To this end, Google’s partnerships with Gentle Monster and Warby Parker, announced at I/O, are strong steps in building smart glasses with aesthetic and functional appeal.

This matters because there’s a sense that smart glasses — and AI-powered wearables more broadly — are becoming devices that people want to wear and use regularly. Meta’s glasses partner, EssilorLuxottica, says that over 2 million pairs of connected Ray-Bans have been sold, indicating good momentum. Google won’t want to let Meta get too far ahead, hence the decision to jump into the market.

But smart glasses have to strike a fine balance between comfort, functionality, user-friendliness and aesthetics. Many attempts have failed on one or more of these metrics, especially when adding a display. A display adds a huge amount of complexity, reduces battery life and amplifies issues such as thermal management. The two products I’ve seen come closest to success with built-in displays are Meta’s Project Orion smart glasses, which I tried in late 2024, and Google’s smart glasses, which I tried at I/O.

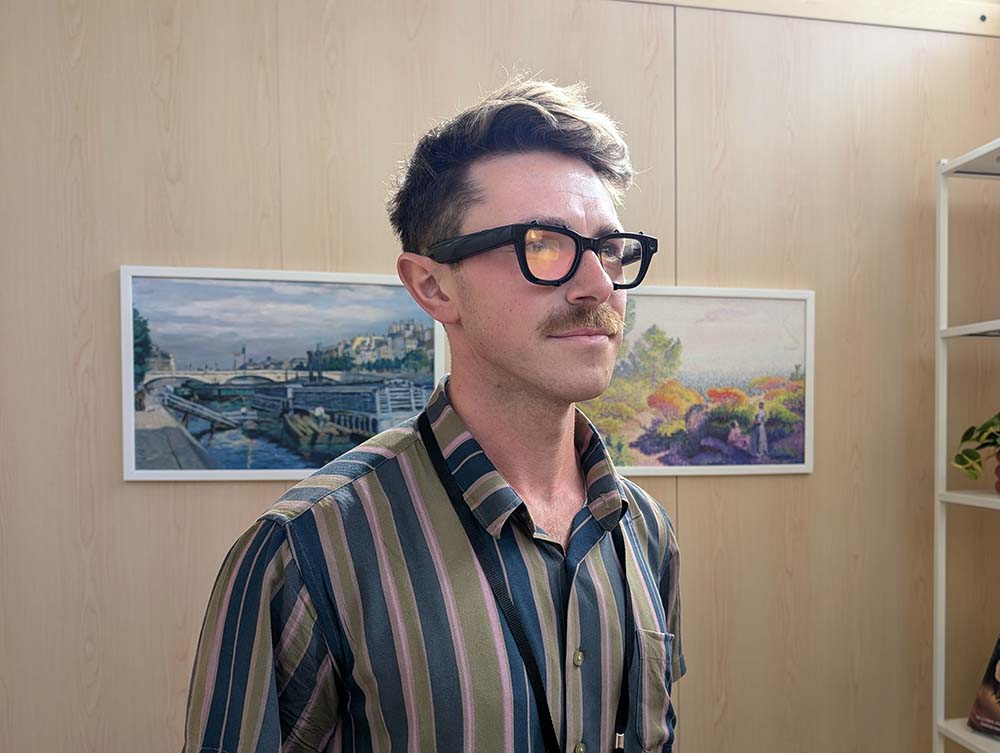

I ran through a quick demo wearing the Google glasses, in which I interacted with the Gemini AI assistant through voice commands. I asked questions about things I could see in the room around me (for example, a painting on the wall) and engaged in natural conversation with follow-up questions. The visual element came to life when I asked for directions to a location. Looking down and seeing a 3D map that pivoted in real time as I turned was a standout moment.

The demo was short but impressive. The glasses are lightweight and comfortable, and Google even kitted me out with custom prescription lenses at only a moment’s notice. This is critical given that glasses wearers are a natural audience for smart glasses. Like the Ray-Ban Meta glasses, the device felt like a normal pair of eyewear with some very cool features. And glasses certainly feel like a natural home for multimodal AI agents like Gemini, given their ability to see and hear your surroundings more easily than a smartphone ever could.

Still, it’s worth noting that Google’s glasses are a concept — the company hasn’t promised to launch its own device yet. Instead, it plans to continue its partnership with Samsung to offer smart glasses.

What’s curious is that during the I/O event, Google and Xreal announced a strategic partnership. Xreal unveiled Project Aura, described as a “next-generation XR device”, “an optical see-through device” and a “lightweight and tethered, cinematic and Gemini AI-powered device”.

What wasn’t it described as? Glasses.

Interestingly, nowhere in Xreal’s press release is the word “glasses” used. It feels like Google is keen to place Project Aura firmly into the “optical see-through headset” category shown in its segmentation below, and not one of the “glasses” segments.

I sense there’s a push at Google to retain the word “glasses” for a specific category of devices — extremely lightweight eyeglasses, which will probably support a lightweight augmented reality experience. But that’ll be tricky when devices like Project Aura exist, and — let’s be honest here — look a lot like glasses.

There are few available details about the specs of this device (pun intended), but we should learn more at the upcoming Augmented World Expo, where Xreal has a keynote slot.

Does this debate over the definition of glasses really matter? Perhaps not — we’re still in the early innings of smart glasses as a category, and it takes time for boundaries between product types to settle. But given some of the tortured efforts we’ve seen in the virtual and mixed reality market to articulate what different headsets can and can’t do, it looks like smart glasses could be heading down the same slippery slope. A lack of marketing clarity doesn’t help in a segment already defined by “you need to see it to believe it”.

More vexing is whether people want glasses with displays or whether the Ray-Ban Meta glasses are succeeding because of their stripped-back approach. I’ve long felt that the appeal of Meta’s glasses lies in their ability as a wearable camera more than anything else, with AI assistance adding a useful secondary capability rather than a main reason to buy a pair. It still isn’t proven that people want to buy and wear display-equipped smart glasses, which will be far more invasive.

Regardless, 2025 will be a big year for head-worn devices. With Samsung’s Project Moohan slated to launch, Meta expected to unveil new glasses with displays and Android XR gearing up, there’s plenty to keep an eye on. Looking further ahead, recent reports suggest that Apple may have a pair of glasses in the works to debut as soon as next year.

Having tried Google’s prototype glasses, I’m impressed by the design progress, but my experience of waiting for smart glasses to come to market tells me it’s a long road ahead. Getting the balance right between design, usability and comfort is no mean feat, and then there’s convincing buyers to open their wallets. Let’s see if they will.
